After calling `tf.keras.mixed_precision.set_global_policy('mixed_float16')`, a newly created layer (`layer1` in the example) automatically uses mixed precision, and its `dtype_policy` attribute reports the policy in effect. A layer can optionally be overridden to use float32 instead of mixed precision, as `layer2` is with `Dense(10, dtype='float32')`. Set the policy back to the initial float32 for future examples.

Passing `dtype='float32'` to the layer is equivalent to passing `dtype=tf.keras.mixed_precision.Policy('float32')`. In general, passing a dtype policy name to a layer is equivalent to passing the corresponding policy, so it is never necessary to explicitly construct a Policy object.

Note: `Model.compile` will automatically wrap an optimizer with a `tf.keras.mixed_precision.LossScaleOptimizer` if you use the 'mixed_float16' policy.
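The note on loss scaling is easier to see with numbers. The sketch below uses only the Python standard library (`struct` format code `e` is IEEE 754 half precision) to show a small gradient vanishing in float16 and surviving when it is scaled first. The helper `to_float16`, the gradient value `1e-8`, and the scale `2 ** 15` are illustrative assumptions, and this is not how `LossScaleOptimizer` is implemented internally.

```python
import struct


def to_float16(x: float) -> float:
    """Round x to the nearest IEEE 754 half-precision value (hypothetical helper)."""
    return struct.unpack("<e", struct.pack("<e", x))[0]


grad = 1e-8              # a small gradient, chosen for illustration
loss_scale = 2.0 ** 15   # illustrative scale factor, an assumption

# Stored directly in float16, the gradient is below the smallest
# representable magnitude and underflows to 0.0.
unscaled = to_float16(grad)

# Scaling before the float16 round trip keeps it representable.
scaled = to_float16(grad * loss_scale)

# Dividing the scale back out in higher precision recovers
# approximately the original value.
recovered = scaled / loss_scale

print("without scaling:", unscaled)
print("with scaling:   ", recovered)
```

The same idea applies to a whole training step: the loss is multiplied by the scale before backpropagation, and the gradients are divided by it before the variables are updated.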

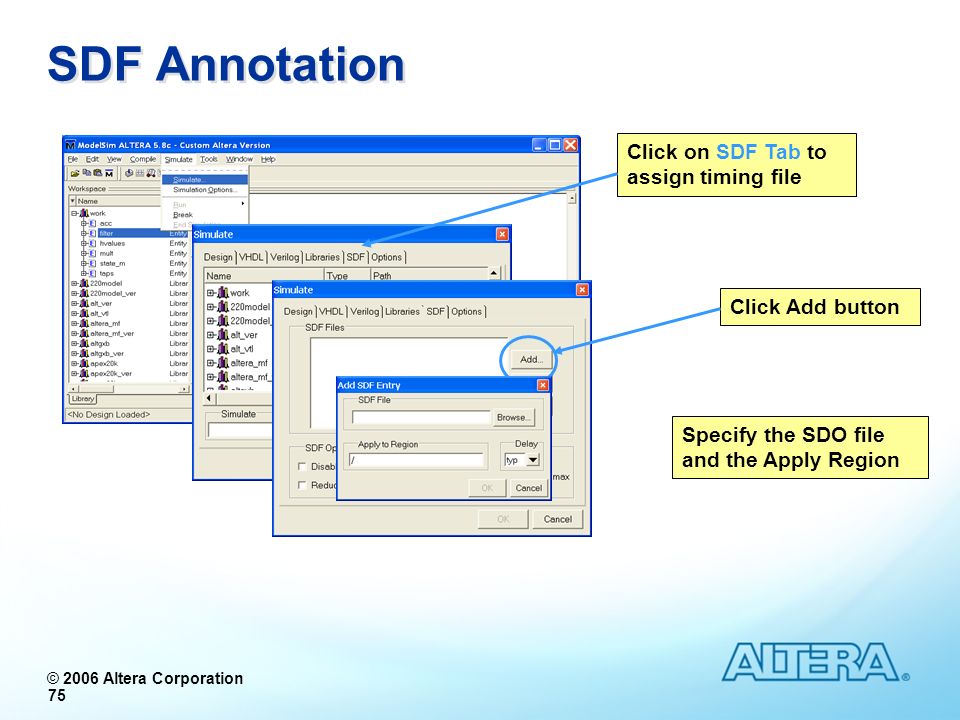

#Add float32 to modelsim 10 how to

A policy name can be any dtype name, such as 'float32' or 'float64', which causes both the compute and variable dtypes to be that dtype. It can also be 'mixed_float16' or 'mixed_bfloat16', which causes the compute dtype to be float16 or bfloat16 and the variable dtype to be float32.

Typically you only need to interact with dtype policies when using mixed precision, which is the use of float16 or bfloat16 for computations and float32 for variables. This is why the term mixed_precision appears in the API name. Mixed precision can be enabled by passing 'mixed_float16' or 'mixed_bfloat16' to tf.keras.mixed_precision.set_global_policy. See the TensorFlow documentation for more information on how to use mixed precision.
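The naming rule can be written out as a small lookup. This is a plain-Python sketch of the rule as described in the text, not TensorFlow's implementation: `DtypePair` and `resolve_policy_name` are hypothetical names, and the function does not check that a name is a real dtype. A real `Policy` object exposes the same two values as its `compute_dtype` and `variable_dtype` attributes.

```python
from typing import NamedTuple


class DtypePair(NamedTuple):
    """Hypothetical container for the two dtypes a policy implies."""
    compute_dtype: str
    variable_dtype: str


def resolve_policy_name(name: str) -> DtypePair:
    """Map a policy name to its compute and variable dtypes (illustrative only)."""
    if name == "mixed_float16":
        return DtypePair("float16", "float32")
    if name == "mixed_bfloat16":
        return DtypePair("bfloat16", "float32")
    # Any other name is treated as a plain dtype name:
    # both dtypes are that dtype.
    return DtypePair(name, name)


for policy_name in ("float32", "float64", "mixed_float16", "mixed_bfloat16"):
    pair = resolve_policy_name(policy_name)
    print(policy_name, "->", pair.compute_dtype, "/", pair.variable_dtype)
```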

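To count the trainable parameters of a graph with the TensorFlow 1.x profiler, use `params = tf.profiler.profile(graph, options=tf.profiler.ProfileOptionBuilder.trainable_variables_parameter())`. When shapes in the graph are not fully defined, the profiler reports a message such as `2 ops no flops stats due to incomplete shapes.`

To freeze the graph, convert its variables to constants with `output_graph = graph_util.convert_variables_to_constants(sess, graph.as_graph_def(), ...)`, where the third argument is the list of output node names. TensorFlow logs `INFO:tensorflow:Converted 2 variables to const ops.` The frozen graph is then written to disk with `with tf.gfile.GFile('graph.pb', "wb") as f: f.write(output_graph.SerializeToString())`.

Combining with Keras

Import the backend with `from keras import backend as K`, then add a layer to the model, for example `model.add(Dense(32, input_dim=4, bias_initializer=Constant(value=0), kernel_initializer=Constant(value=1)))`.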
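As a sanity check on whatever the profiler reports, the numbers for a layer like `Dense(32, input_dim=4)` can be worked out by hand. The sketch below is plain Python and does not call TensorFlow. The function names are hypothetical, and the FLOP convention (one multiply plus one add per kernel weight, bias add not counted) is an assumption, so a profiler may count differently.

```python
from typing import List


def dense_param_count(input_dim: int, units: int, use_bias: bool = True) -> int:
    """Trainable parameters of a fully connected layer: kernel plus optional bias."""
    kernel = input_dim * units
    bias = units if use_bias else 0
    return kernel + bias


def dense_matmul_flops(input_dim: int, units: int, batch_size: int = 1) -> int:
    """Assumed convention: one multiply and one add per kernel weight per example."""
    return 2 * batch_size * input_dim * units


def dense_forward(x: List[float], units: int,
                  kernel_value: float = 1.0, bias_value: float = 0.0) -> List[float]:
    """Forward pass with constant initializers, as in Constant(value=1) / Constant(value=0)."""
    weighted_sum = sum(xi * kernel_value for xi in x)
    return [weighted_sum + bias_value for _ in range(units)]


params = dense_param_count(input_dim=4, units=32)   # 4 * 32 kernel weights + 32 biases
flops = dense_matmul_flops(input_dim=4, units=32)   # 2 * 4 * 32
outputs = dense_forward([1.0, 2.0, 3.0, 4.0], units=32)

print("params:", params)
print("matmul FLOPs per example:", flops)
print("first output:", outputs[0])
```

With a kernel of all ones and a zero bias, every unit outputs the sum of the inputs, which makes the layer easy to verify by eye.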
